Matrix multiplication

In mathematics, matrix multiplication is the operation of multiplying a matrix with either a scalar or another matrix. This article gives an overview of the various types of matrix multiplication.

Contents |

Matrix product

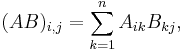

The matrix product is the most commonly used type of product of matrices. Matrices offer a concise way of representing linear transformations between vector spaces, and matrix multiplication corresponds to the composition of linear transformations. The matrix product of two matrices can be defined when their entries belong to the same ring, and hence can be added and multiplied, and, additionally, the number of the columns of the first matrix matches the number of the rows of the second matrix. The product of an m×p matrix A with an p×n matrix B is an m×n matrix denoted AB whose entries are

where 1 ≤ i ≤ m is the row index and 1 ≤ j ≤ n is the column index. This definition can be restated by postulating that the matrix product is left and right distributive and the matrix units are multiplied according to the following rule:

where the first factor is the m×n matrix with 1 at the intersection of the ith row and the kth column and zeros elsewhere and the second factor is the p×n matrix with 1 at the intersection of the lth row and the jth column and zeros elsewhere.

Properties

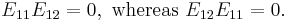

- In general, matrix multiplication is not commutative. More precisely, AB and BA need not be simultaneously defined; if they are, they may have different dimensions; and even if A and B are square matrices of the same order n, so that AB and BA are also square matrices of order n, if n is greater or equal than 2, AB need not be equal to BA. For example,

- However, if A and B are both diagonal square matrices of the same order then AB = BA.

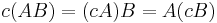

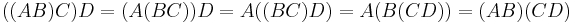

- Matrix multiplication is associative:

- Matrix multiplication is distributive over matrix addition:

- provided that the expression in either side of each identity is defined.

- For matrices with entries in a field, the matrix product is compatible with scalar multiplication:

- where c is a scalar.

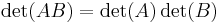

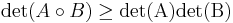

- If A and B are both nxn matrices with entries in a field then the determinant of their product is the product of their determinants:

- In particular, the determinants of AB and BA coincide.

- Let U, V, and W be vector spaces over the same field with certain bases, S: V → W and T: U → V be linear transformations and ST: U → W be their composition. Suppose that A, B, and C are the matrices of T, S, and ST with respect to the given bases. Then

- Thus the matrix of the composition (or the product) of linear transformations is the product of their matrices with respect to the given bases.

Illustration

The figure to the right illustrates the product of two matrices A and B, showing how each intersection in the product matrix corresponds to a row of A and a column of B. The size of the output matrix is always the largest possible, i.e. for each row of A and for each column of B there are always corresponding intersections in the product matrix. The product matrix AB consists of all combinations of dot products of rows of A and columns of B.

The values at the intersections marked with circles are:

In general, the matrix product is non-commutative. For example,

The element  of the above matrix product is computed as follows

of the above matrix product is computed as follows

The first coordinate in matrix notation denotes the row and the second the column; this order is used both in indexing and in giving the dimensions. The element  at the intersection of row

at the intersection of row  and column

and column  of the product matrix is the dot product (or scalar product) of row

of the product matrix is the dot product (or scalar product) of row  of the first matrix and column

of the first matrix and column  of the second matrix. This explains why the width and the height of the matrices being multiplied must match: otherwise the dot product is not defined.

of the second matrix. This explains why the width and the height of the matrices being multiplied must match: otherwise the dot product is not defined.

Product of several matrices

Matrix product can be extended to the case of several matrices, provided that their dimensions match, and it is associative, i.e. the result does not depend on the way the factors are grouped together. If A, B, C, and D are, respectively, an m×p, p×q, q×r, and r×n matrices, then there are 5 ways of grouping them without changing their order, and

is an m×n matrix denoted ABCD.

Alternative descriptions

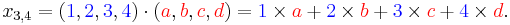

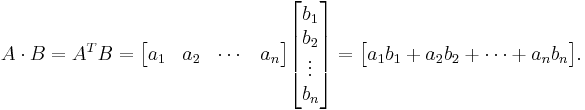

The Euclidean inner product and outer product are the simplest special cases of the matrix product. The inner product of two column vectors  and

and  is

is  , where T denotes the matrix transpose. More explicitly,

, where T denotes the matrix transpose. More explicitly,

The outer product is  , where

, where

Matrix multiplication can be viewed in terms of these two operations by considering the effect of the matrix product on block matrices.

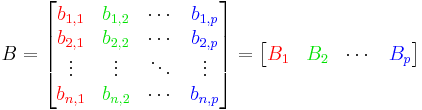

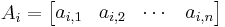

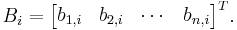

Suppose that the first factor, A, is decomposed into its rows, which are row vectors and the second factor, B, is decomposed into its columns, which are column vectors:

where

The method in the introduction was:

This is an outer product where the product inside is replaced with the inner product. In general, block matrix multiplication works exactly like ordinary matrix multiplication, but the real product inside is replaced with the matrix product.

An alternative method results when the decomposition is done the other way around, i.e. the first factor, A, is decomposed into column vectors and the second factor, B, is decomposed into row vectors:

This method emphasizes the effect of individual column/row pairs on the result, which is a useful point of view with e.g. covariance matrices, where each such pair corresponds to the effect of a single sample point. An example for a small matrix:

One more description of the matrix product may be obtained in the case when the second factor, B, is decomposed into the columns and the first factor, A, is viewed as a whole. Then A acts on the columns of B. If x is a vector and A is decomposed into columns, then

.

.

Algorithms for efficient matrix multiplication

The running time of square matrix multiplication, if carried out naively, is  . The running time for multiplying rectangular matrices (one m×p-matrix with one p×n-matrix) is O(mnp). But more efficient algorithms do exist. Strassen's algorithm, devised by Volker Strassen in 1969 and often referred to as "fast matrix multiplication", is based on a clever way of multiplying two 2 × 2 matrices which requires only 7 multiplications (instead of the usual 8), at the expense of several additional addition and subtraction operations. Applying this trick recursively gives an algorithm with a multiplicative cost of

. The running time for multiplying rectangular matrices (one m×p-matrix with one p×n-matrix) is O(mnp). But more efficient algorithms do exist. Strassen's algorithm, devised by Volker Strassen in 1969 and often referred to as "fast matrix multiplication", is based on a clever way of multiplying two 2 × 2 matrices which requires only 7 multiplications (instead of the usual 8), at the expense of several additional addition and subtraction operations. Applying this trick recursively gives an algorithm with a multiplicative cost of  . Strassen's algorithm is awkward to implement, compared to the naive algorithm, and it lacks numerical stability. Nevertheless, it is beginning to appear in libraries such as BLAS, where it is computationally interesting for matrices with dimensions n > 100[1], and is very useful for large matrices over exact domains such as finite fields, where numerical stability is not an issue.

. Strassen's algorithm is awkward to implement, compared to the naive algorithm, and it lacks numerical stability. Nevertheless, it is beginning to appear in libraries such as BLAS, where it is computationally interesting for matrices with dimensions n > 100[1], and is very useful for large matrices over exact domains such as finite fields, where numerical stability is not an issue.

The currently  algorithm with the lowest known exponent k is the Coppersmith–Winograd algorithm. It was presented by Don Coppersmith and Shmuel Winograd in 1990, has an asymptotic complexity of O(n2.376). It is similar to Strassen's algorithm: a clever way is devised for multiplying two k × k matrices with fewer than k3 multiplications, and this technique is applied recursively. However, the constant coefficient hidden by the Big O Notation is so large that the Coppersmith–Winograd algorithm is only worthwhile for matrices that are too large to handle on present-day computers.[2]

algorithm with the lowest known exponent k is the Coppersmith–Winograd algorithm. It was presented by Don Coppersmith and Shmuel Winograd in 1990, has an asymptotic complexity of O(n2.376). It is similar to Strassen's algorithm: a clever way is devised for multiplying two k × k matrices with fewer than k3 multiplications, and this technique is applied recursively. However, the constant coefficient hidden by the Big O Notation is so large that the Coppersmith–Winograd algorithm is only worthwhile for matrices that are too large to handle on present-day computers.[2]

Since any algorithm for multiplying two n × n matrices has to process all 2 × n² entries, there is an asymptotic lower bound of  operations. Raz (2002) proves a lower bound of

operations. Raz (2002) proves a lower bound of  for bounded coefficient arithmetic circuits over the real or complex numbers.

for bounded coefficient arithmetic circuits over the real or complex numbers.

Cohn et al. (2003, 2005) put methods, such as the Strassen and Coppersmith–Winograd algorithms, in an entirely different group-theoretic context. They show that if families of wreath products of Abelian with symmetric groups satisfying certain conditions exist, then there are matrix multiplication algorithms with essential quadratic complexity. Most researchers believe that this is indeed the case[3] - a lengthy attempt at proving this was undertaken by the late Jim Eve.[4]

Because of the nature of matrix operations and the layout of matrices in memory, it is typically possible to gain substantial performance gains through use of parallelisation and vectorization. It should therefore be noted that some lower time-complexity algorithms on paper may have indirect time complexity costs on real machines.

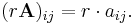

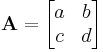

Scalar multiplication

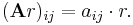

The scalar multiplication of a matrix A = (aij) and a scalar r gives a product r A of the same size as A. The entries of r A are given by

For example, if

then

If we are concerned with matrices over a more general ring, then the above multiplication is the left multiplication of the matrix A with scalar p while the right multiplication is defined to be

When the underlying ring is commutative, for example, the real or complex number field, the two multiplications are the same. However, if the ring is not commutative, such as the quaternions, they may be different. For example

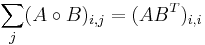

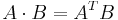

Hadamard product

For two matrices of the same dimensions, we have the Hadamard product (named after French mathematician Jacques Hadamard), also known as the entrywise product and the Schur product.[5]

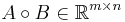

Formally, for two matrices of the same dimensions:

the Hadamard product A · B is a matrix of the same dimensions

with elements given by

Note that the Hadamard product is a principal submatrix of the Kronecker product.

The Hadamard product is commutative, and distributive over addition.

The Hadamard product of two positive-(semi)definite matrices is positive-(semi)definite[6], and for positive-semidefinite  and

and

.

.

For vectors  and

and  , and corresponding diagonal matrices

, and corresponding diagonal matrices  and

and  with these vectors as their leading diagonals, the following identity holds:[7]

with these vectors as their leading diagonals, the following identity holds:[7]

,

,

where  denotes the conjugate transpose of

denotes the conjugate transpose of  . In particular, using vectors of ones, this shows that the sum of all elements in the Hadamard product is the trace of

. In particular, using vectors of ones, this shows that the sum of all elements in the Hadamard product is the trace of  .

.

A related result for square  and

and  , is that the row-sums of their Hadamard product are the diagonal elements of

, is that the row-sums of their Hadamard product are the diagonal elements of  [8]

[8]

The Hadamard product appears in lossy compression algorithms such as JPEG.

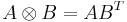

Kronecker product

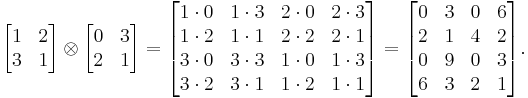

For any two arbitrary matrices A and B, we have the direct product or Kronecker product A ⊗ B defined as

If A is an m-by-n matrix and B is a p-by-q matrix, then their Kronecker product A ⊗ B is an mp-by-nq matrix.

The Kronecker product is not commutative.

For example

If A and B represent linear transformations V1 → W1 and V2 → W2, respectively, then A ⊗ B represents the tensor product of the two maps, V1 ⊗ V2 → W1 ⊗ W2.

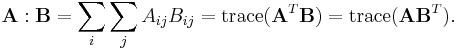

Frobenius inner product

The Frobenius inner product, sometimes denoted A:B is the component-wise inner product of two matrices as though they are vectors. In other words, it is the sum of the entries of the Hadamard product, that is,

This inner product induces the Frobenius norm.

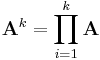

Powers of matrices

Square matrices can be multiplied by themselves repeatedly in the same way that ordinary numbers can. This repeated multiplication can be described as a power of the matrix. Using the ordinary notion of matrix multiplication, the following identities hold for an n-by-n matrix A, a positive integer k, and a scalar c:

The naive computation of matrix powers is to multiply k times the matrix A to the result, starting with the identity matrix just like the scalar case. This can be improved using the binary representation of k, a method commonly used to scalars. An even better method is to use the eigenvalue decomposition of A.

Calculating high powers of matrices can be very time-consuming, but the complexity of the calculation can be dramatically decreased by using the Cayley-Hamilton theorem, which takes advantage of an identity found using the matrices' characteristic polynomial and gives a much more effective equation for Ak, which instead raises a scalar to the required power, rather than a matrix.

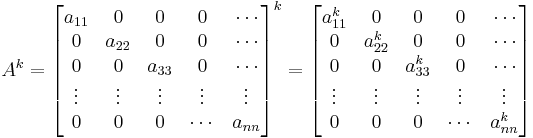

Powers of diagonal matrices

The power, k, of a diagonal matrix A, is the diagonal matrix whose diagonal entries are the k powers of the original matrix A.

When raising an arbitrary matrix (not necessarily a diagonal matrix) to a power, it is often helpful to diagonalize the matrix first.

See also

- Cracovian (for another matrix product)

- Kronecker product

- Strassen algorithm (1969)

- Winograd algorithm (1980)

- Coppersmith–Winograd algorithm (1990)

- Logical matrix

- Matrix chain multiplication

- Matrix inversion

- Composition of relations

- Basic Linear Algebra Subprograms

- Matrix addition

- Dot product

Notes

- ↑ Press 2007, p. 108.

- ↑ Robinson, Sara (2005), "Toward an Optimal Algorithm for Matrix Multiplication", SIAM News 38 (9), http://www.siam.org/pdf/news/174.pdf

- ↑ Robinson, 2005.

- ↑ Eve, 2009.

- ↑ (Horn & Johnson 1985, Ch. 5).

- ↑ (Styan 1973)

- ↑ (Horn & Johnson 1991)

- ↑ (Styan 1973)

References

- Henry Cohn, Robert Kleinberg, Balazs Szegedy, and Chris Umans. Group-theoretic Algorithms for Matrix Multiplication. arXiv:math.GR/0511460. Proceedings of the 46th Annual Symposium on Foundations of Computer Science, 23-25 October 2005, Pittsburgh, PA, IEEE Computer Society, pp. 379–388.

- Henry Cohn, Chris Umans. A Group-theoretic Approach to Fast Matrix Multiplication. arXiv:math.GR/0307321. Proceedings of the 44th Annual IEEE Symposium on Foundations of Computer Science, 11-14 October 2003, Cambridge, MA, IEEE Computer Society, pp. 438–449.

- Coppersmith, D., Winograd S., Matrix multiplication via arithmetic progressions, J. Symbolic Comput. 9, p. 251-280, 1990.

- Eve, James. On O(n^2 log n) algorithms for n x n matrix operations. Technical Report No. 1169, School of Computing Science, Newcastle University, August 2009. PDF

- Horn, Roger A.; Johnson, Charles R. (1985), Matrix Analysis, Cambridge University Press, ISBN 978-0-521-38632-6

- Horn, Roger A.; Johnson, Charles R. (1991), Topics in Matrix Analysis, Cambridge University Press, ISBN 978-0-521-46713-1

- Knuth, D.E., The Art of Computer Programming Volume 2: Seminumerical Algorithms. Addison-Wesley Professional; 3 edition (November 14, 1997). ISBN 978-0201896848. pp. 501.

- Press, William H.; Flannery, Brian P.; Teukolsky, Saul A.; Vetterling, William T. (2007), Numerical Recipes: The Art of Scientific Computing (3rd ed.), Cambridge University Press, ISBN 978-0-521-88068-8.

- Ran Raz. On the complexity of matrix product. In Proceedings of the thirty-fourth annual ACM symposium on Theory of computing. ACM Press, 2002. doi:10.1145/509907.509932.

- Robinson, Sara, Toward an Optimal Algorithm for Matrix Multiplication, SIAM News 38(9), November 2005. PDF

- Strassen, Volker, Gaussian Elimination is not Optimal, Numer. Math. 13, p. 354-356, 1969.

- Styan, George P. H. (1973), "Hadamard Products and Multivariate Statistical Analysis", Linear Algebra and its Applications 6: 217-240

External links

- The Simultaneous Triple Product Property and Group-theoretic Results for the Exponent of Matrix Multiplication

- WIMS Online Matrix Multiplier

- Matrix Multiplication Problems

- Block Matrix Multiplication Problems

- Matrix Multiplication in C

- Linear algebra: matrix operations Multiply or add matrices of a type and with coefficients you choose and see how the result was computed.

- Visual Matrix Multiplication An interactive app for learning matrix multiplication.